0.1.7

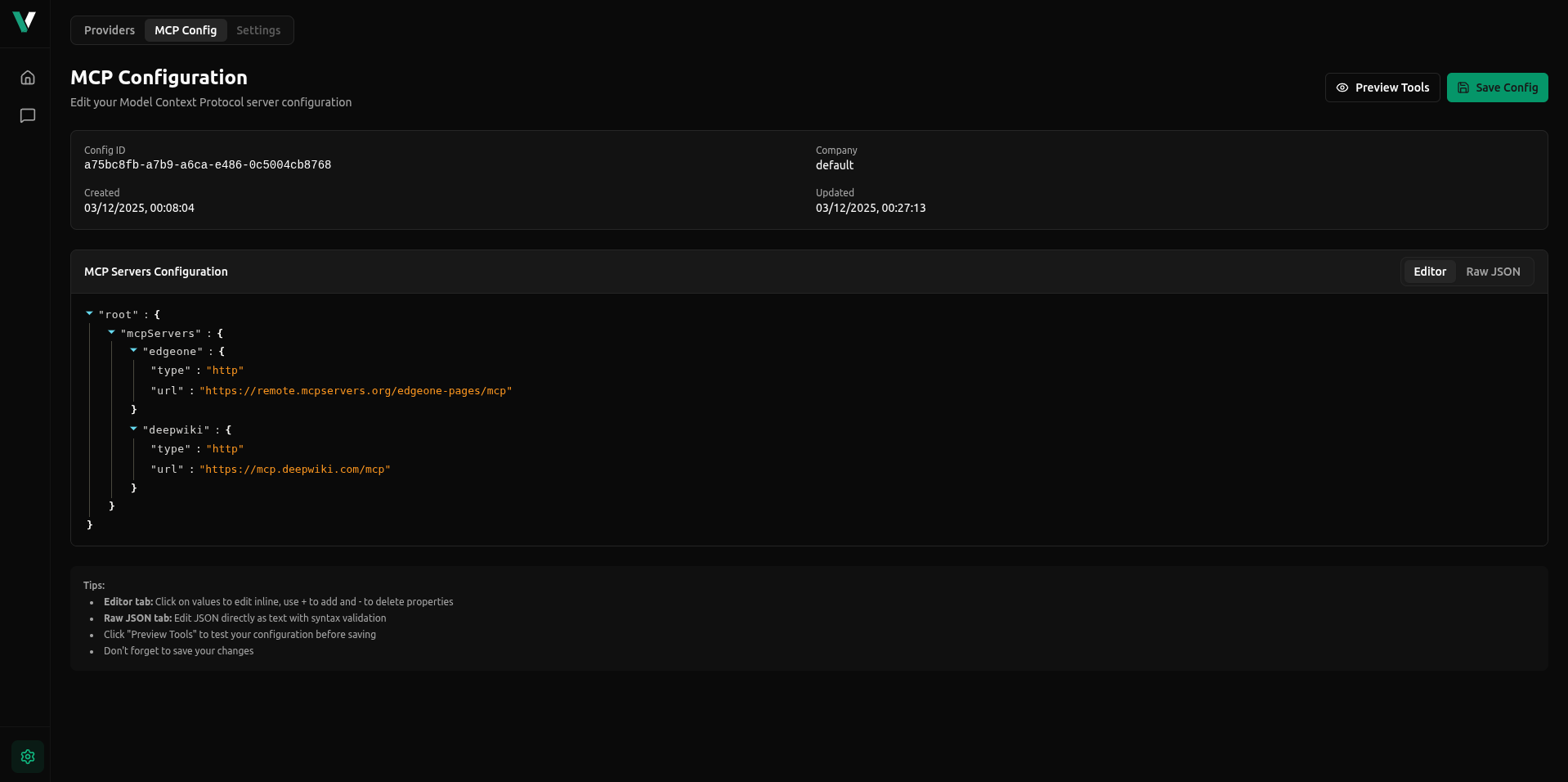

Introducing MCP support, enabling seamless integration with Model Context Protocol servers. Connect your AI models to external tools, APIs, databases, and services over HTTP, SSE, or WebSocket transports. vLLora automatically discovers the tools an MCP server exposes, executes tool calls on your behalf, and traces every interaction, making it easy to extend your models with dynamic capabilities.
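For readers new to MCP, the two exchanges described above (tool discovery and tool execution) can be illustrated with a minimal Python sketch. It is not vLLora's implementation, which is written in Rust. It assumes the JSON-RPC 2.0 framing and the `tools/list` and `tools/call` methods from the MCP specification, and it maps each discovered tool onto an OpenAI-style function definition. The helper names `jsonrpc_request`, `mcp_tool_to_openai`, and `tool_call_to_mcp` are hypothetical, and transport handling (HTTP, SSE, WebSocket) is omitted.

```python
import json


def jsonrpc_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request object, the framing MCP uses on every transport."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def mcp_tool_to_openai(tool):
    """Map one entry of an MCP `tools/list` result to an OpenAI-style function tool.

    Hypothetical helper: this is an illustration, not vLLora's mapping code.
    """
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP describes a tool's arguments with a JSON Schema under `inputSchema`.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


def tool_call_to_mcp(request_id, tool_call):
    """Turn a model-issued tool call into an MCP `tools/call` request.

    OpenAI-style tool calls carry `arguments` as a JSON string; an empty
    string is treated as "no arguments" instead of a parse error.
    """
    raw_args = tool_call["function"].get("arguments") or "{}"
    return jsonrpc_request(
        request_id,
        "tools/call",
        {"name": tool_call["function"]["name"], "arguments": json.loads(raw_args)},
    )


# Example: one discovered tool, then a model-issued call to it.
listed = {"tools": [{"name": "search", "description": "Web search",
                     "inputSchema": {"type": "object",
                                     "properties": {"query": {"type": "string"}}}}]}
openai_tools = [mcp_tool_to_openai(t) for t in listed["tools"]]
call = tool_call_to_mcp(2, {"id": "call_1", "type": "function",
                            "function": {"name": "search",
                                         "arguments": "{\"query\": \"vllora\"}"}})
```

The gateway would send `call` to the MCP server and feed the server's result back to the model as a tool message; that round trip is left out here.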

Other improvements in this release

- Full MCP server support with HTTP, SSE, and WebSocket transports, enabling dynamic tool execution and external system integration

- Embedding model support for Bedrock, Gemini, and OpenAI with comprehensive tracing and cost tracking

- Enhanced routing with conditional strategies, fallbacks, and maximum depth limits for complex request flows

- Improved cost tracking with cached input token pricing and enhanced usage monitoring across all providers

- Response caching improvements for better performance and cost optimization

- Thread handling with service integration and middleware support for conversation management

- Multi-tenant OpenTelemetry tracing with tenant-aware span management

- Claude Sonnet 4.5 model support

- Virtual model versioning via `model@version` syntax for flexible model selection

- Variables support in chat completions for dynamic prompt templating

- Enhanced model metadata with service level, release date, license, and knowledge cutoff information

- Google Vertex AI model fetching and integration

- Improved span management with RunSpanBuffer for efficient trace processing
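A `model@version` reference can be split into a virtual model name and an optional version pin. The following is a hypothetical Python sketch of that parsing step. The splitting rule (the last `@` wins), the treatment of a missing or empty version as "not pinned", and the names `VirtualModelRef` and `parse_model_ref` are assumptions, not documented vLLora behavior.

```python
from typing import NamedTuple, Optional


class VirtualModelRef(NamedTuple):
    name: str
    version: Optional[str]  # None means "no version pinned" (an assumption)


def parse_model_ref(model: str) -> VirtualModelRef:
    """Split a `model@version` string at the last '@' (hypothetical rule)."""
    name, sep, version = model.rpartition("@")
    if not sep:
        # No '@' at all: rpartition puts the whole string in the last slot.
        return VirtualModelRef(model, None)
    if not name:
        raise ValueError(f"missing model name in {model!r}")
    # "router@" is treated the same as an unpinned "router".
    return VirtualModelRef(name, version or None)
```

For example, `parse_model_ref("my-router@3")` yields `VirtualModelRef(name="my-router", version="3")`, while a bare `"my-router"` yields an unpinned reference that a gateway could resolve to whichever version it treats as current.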

Features

- feat: Add JSON configuration with 8MB limit and clean up unused imports in http.rs (@karolisg) (4689128)

- feat: Introduce RunSpanBuffer for efficient span management and update trace service to utilize it (@karolisg) (483148c)

- feat: Update provider credentials handling to include GatewayTenant in update_provider_key function (@karolisg) (60e4fee)

- feat: Introduce thread handling module with service and middleware integration (@karolisg) (a5a9ccb)

- feat: add option to control UI opening on startup and update configuration handling (@karolisg) (e5ecdf4)

- #175 Feat: Tracing MCP server (@karolisg)

- feat: Add Slack notification for new brew releases (@karolisg) (a1632b2)

- feat: add Slack notification job to GitHub Actions workflow for release updates (@karolisg) (cf7b148)

- feat: Support claude sonnet 4.5 (@karolisg) (931dd56)

- feat: add cost field to span model for enhanced tracking capabilities (@karolisg) (64f3a7b)

- feat: add message_id field to span model and track elapsed time for processing streams across models (@karolisg) (d3dda6d)

- feat: add model and inference model names to tracing fields in TracedEmbedding (@karolisg) (6c096ca)

- feat: add LLMStartEvent logging for Bedrock, Gemini, and OpenAI embedding models (@karolisg) (f24fea3)

- feat: capture spans for Bedrock, Gemini, and OpenAI embedding models (@karolisg) (7197ae6)

- feat: Replace custom error handling with specific ModelError variants (@karolisg) (7839211)

- feat: Support base64 encoding in embeddings (@karolisg) (def4f90)

- feat: Add methods for token pricing in ModelPrice enum (@karolisg) (631664a)

- feat: Enhance OpenAI embeddings support with Azure integration and improve error handling (@karolisg) (16b401d)

- feat: Add Bedrock embeddings support and enhance error handling (@karolisg) (4d7858b)

- feat: Introduce Gemini embeddings model and enhance provider error handling (@karolisg) (785371a)

- feat: Add is_private field to model metadata for enhanced privacy control (@karolisg) (217ee73)

- feat: Add new embedding models and enhance model handling (@karolisg) (9ffa27a)

- #129 feat: Fetch models from Google Vertex (@karolisg)

- feat: Add async method to retrieve top model metadata by ranking (@karolisg) (6f45d3d)

- feat: Add model metadata support to chat completion execution (@karolisg) (e4d1b8f)

- feat: Add support for roles in ClickhouseHttp URL construction (@karolisg) (dabdfdd)

- feat: Extend ChatCompletionMessage struct to include optional fields for tool calls, refusal, tool call ID, and cache control (@karolisg) (529d4ea)

- feat: Add build_response method to AnthropicModel for constructing MessagesResponseBody from stream data (@karolisg) (1c42ef5)

- feat: Enhance GenerateContentResponse structure to include model_version and response_id (@karolisg) (3d6d572)

- feat: Implement build_response method to construct CreateChatCompletionResponse from stream data for tracing purposes (@karolisg) (e305a0b)

- feat: Extend ModelMetadata with new fields for service level, release date, license, and knowledge cutoff date (@karolisg) (797aff5)

- feat: Add serde alias for InterceptorType Guardrail to support legacy "guard" identifier (@karolisg) (9dc6c43)

- #116 feat: Implement conditional routing strategy (@karolisg)

- feat: Integrate cache control logic into message content handling in MessageMapper (@karolisg) (416cb1d)

- feat: Update langdb_clust to version 0.9.4 and enhance token usage tracking in cost calculations (@karolisg) (489a4a9)

- feat: Add benchmark_info field to ModelMetadata (@karolisg) (1b7904f)

- feat: Introduce CacheControl struct and integrate it into message mapping for content types (@karolisg) (cc715da)

- feat: Add support for cached input token pricing in cost calculations and update related structures (@karolisg) (f1d4077)

- feat: Return template directly if no variables are provided in render function (@karolisg) (6adaf12)

- feat: Enhance logging by recording request payloads in Gemini client (@karolisg) (86a9875)

- feat: Add API_CALLS_BY_IP constant for enhanced rate limiting functionality (@karolisg) (5e5958c)

- feat: Add optional user_email field to RequestUser struct (@karolisg) (982c240)

- feat: Implement maximum depth limit for request routing in RoutedExecutor (@karolisg) (357ffca)

- feat: Handle max retries in request (@karolisg) (31d9d41)

- feat: add custom event for model events (@karolisg) (7299233)

- feat: Support project traces channels (@karolisg) (ce0efef)

- feat: add run lifecycle events and fix model usage tracking (@karolisg) (31875af)

- feat: implement tenant-aware OpenTelemetry trace (@karolisg) (ee48ae3)

- #92 feat: Basic responses support (@karolisg)

- #86 feat: Support http streamable transport (@karolisg)

- feat: Add options struct for prompt caching (@karolisg) (61181bc)

- feat: add description and keywords fields to thread (@karolisg) (11411ec)

- feat: Add key generation for transport type (@karolisg) (3238632)

- feat: add version support for virtual model retrieval via model@version syntax (@karolisg) (b1f4045)

- #73 feat: Add variables field to chat completions (@karolisg)

- #72 feat: Enhanced support for MCP servers (@karolisg)

- feat: Support azure url parsing and usage in client (@karolisg) (cb4c665)

- feat: Store tools results in spans (@karolisg) (4f8deff)

- feat: Store openai partner moderations guard metadata (@karolisg) (0415257)

- feat: Support openai moderation guardrails (@karolisg) (0285528)

- feat: Return 446 error on guard rejection (@karolisg) (e0dd668)

- #54 feat: Support custom endpoint for openai client (@karolisg)

- #46 feat: Implement guardrails system (@VG)

- feat: Support multiple identifiers in cost control (@karolisg) (302a84c)

- feat: Support all anthropic properties (@karolisg) (59dec4b)

- feat: Add support of anthropic thinking (@karolisg) (ff9815a)

- feat: Add extra to request (@karolisg) (a9d3c32)

- #29 feat: Support search in memory mcp tool (@VG)

- #28 feat: Use time windows for metrics (@karolisg)

- feat: Refactor targets usage for percentage router (@karolisg) (0445101)

- #21 feat: Support langdb key (@karolisg)

- #20 feat: Integrate routed execution with fallbacks (@karolisg)

- feat: Add missing gemini parameters (@karolisg) (5f1d15e)

- #15 feat: Improve UI (@karolisg)

- feat: Add model name and provider name to embeddings API (@karolisg) (452cb91)

- feat: Print provider and model name in logs (@karolisg) (3403968)

- #4 feat: Implement tui (@VG)

- #3 feat: Build for ubuntu and docker images (@VG)

- feat: Support .env variables for config (@karolisg) (65561e9)

- feat: Use in memory storage (@karolisg) (75bf2a1)

- feat: implement mcp support (@VG) (a97bc68)

- feat: Add rate limiting (@karolisg) (7066d87)

- feat: Add cost control and limit checker (@karolisg) (1300266)

- feat: Use user in openai requests (@karolisg) (96213dc)

- feat: Add api_invoke spans (@karolisg) (329ace4)

- feat: Enable otel when clickhouse config provided (@karolisg) (edbd16c)

- feat: Add database span writer (@karolisg) (72bd326)

- feat: Add clickhouse dependency (@karolisg) (78fe8ca)
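The routing entries above (routed execution with fallbacks in #20 and the maximum depth limit in 357ffca) combine naturally: targets are tried in order, a failing target falls through to the next one, and nesting is capped so a misconfigured or self-referencing router cannot recurse forever. This is a hypothetical Python sketch of that idea, not the `RoutedExecutor` code. The `Target` shape, `execute_routed`, `MaxDepthExceeded`, and the default limit of 5 are all assumptions.

```python
from typing import Callable, List, Union

# Hypothetical target shape: a concrete model name, or a nested router given
# as a list of targets that are tried in order.
Target = Union[str, List["Target"]]


class MaxDepthExceeded(Exception):
    """Raised when routers are nested deeper than the configured limit."""


def execute_routed(target: Target, call: Callable[[str], str],
                   max_depth: int = 5, depth: int = 0) -> str:
    """Try targets in order, falling back on failure, with a nesting limit."""
    if depth > max_depth:
        raise MaxDepthExceeded(f"routing depth {depth} exceeds limit {max_depth}")
    if isinstance(target, str):
        return call(target)  # a concrete model: invoke it directly
    errors = []
    for candidate in target:
        try:
            return execute_routed(candidate, call, max_depth, depth + 1)
        except MaxDepthExceeded:
            raise  # a configuration problem, not a reason to fall back
        except Exception as exc:  # provider error: record it, try the next target
            errors.append(exc)
    raise RuntimeError(f"all {len(target)} targets failed: {errors}")


# Example: the primary model is down, so the router falls back to the backup.
def fake_call(model: str) -> str:
    if model == "primary":
        raise ConnectionError("primary down")
    return "response from " + model


result = execute_routed(["primary", "backup"], fake_call)
```

Re-raising `MaxDepthExceeded` instead of falling back is a deliberate choice in this sketch: a depth violation signals a broken configuration, and trying sibling targets would only hide it.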

Bug Fixes

- fix: Change From implementation to TryFrom for GenericGroupResponse and handle errors with GatewayError (@karolisg) (ff34d81)

- fix: Update tools definition to use Option for type field (@karolisg) (1b8d21b)

- fix: Update trace ID and span ID conversion in span_to_db_trace function (@karolisg) (ccc8881)

- fix: Add serde aliases for ListGroupQueryParams and GroupByKey fields (@karolisg) (09b35e7)

- fix: Fix filter params aliases (@karolisg) (db0219f)

- fix: Support postgres table for mcp configs (@karolisg) (a6235b7)

- fix: correct version formatting in check_version function for header and update check (@karolisg) (554b79d)

- fix: format version header in session check_version function (@karolisg) (1aa7c88)

- #178 fix: enhance error logging in Actix OTEL middleware (@karolisg)

- fix: Invalidate Rust build cache when UI content changes (@duonganhthu43) (1e8dc3f)

- fix: fmt (@duonganhthu43) (a2d3c3e)

- fix: Use tool.name instead of label in tools span (@karolisg) (a3f2e6f)

- fix: Send only part of headers to session track (@karolisg) (137da3d)

- fix: build ui part (@duonganhthu43) (c1ac76a)

- fix: enhance usage tracking by adding raw_usage field and implementing content comparison in Message struct (@karolisg) (309a772)

- fix: Improve error message for invalid ModelCapability (@karolisg) (25973bf)

- fix: Update token calculation in OpenAIModel to include reasoning tokens (@karolisg) (7f54726)

- #140 fix: Add support for custom headers in transports. Fixes #135 (@karolisg)

- fix: Update routing logic to always return true for ErrorRate metric when no metrics are available (@karolisg) (ead8fc0)

- fix: Update apply_guardrails call to use slice reference for message to ensure proper handling (@karolisg) (f00c778)

- fix: Improve error handling in stream_chunks by logging send errors for GatewayApiError (@karolisg) (74c6d54)

- fix: Update GatewayApiError handling for ModelError to return BAD_REQUEST for ModelNotFound (@karolisg) (bacb9ef)

- fix: Correct input token cost calculation by ensuring cached tokens are properly subtracted (@karolisg) (f42a00b)

- fix: Workaround xai tool calls issue (@karolisg) (da836f1)

- fix: Add workaround for XAI bug (@karolisg) (aeef458)

- fix: Handle template error during rendering (@karolisg) (45d960c)

- fix: Fix operation name for model spans (@karolisg) (ba68f69)

- fix: Fix retries handle in llm calls (@karolisg) (7c5354b)

- fix: Fix retries logic (@karolisg) (3edfae3)

- fix: Handle thought signature in gemini response (@karolisg) (f1e1501)

- fix: Fix duplicated tools labels in gemini tools spans (@karolisg) (233b100)

- fix: Properly handle model calls traces in gemini (@karolisg) (f57d522)

- fix: Empty required parameters list (@karolisg) (4e887b3)

- fix: Fix required default value (@karolisg) (c2ebf62)

- fix: Fix tracing for cached responses (@karolisg) (b9422cd)

- fix: handle cache response errors gracefully in gateway service (@karolisg) (38eba17)

- fix: Handle nullable types in gemini (@karolisg) (4a5a3ad)

- fix: Fix nested gemini structured output schema (@karolisg) (e97a592)

- fix: Fix gemini structured output generation (@karolisg) (45b376a)

- fix: Fix gemini tool calls (@karolisg) (5331e2c)

- fix: Store call information in anthropic span when system prompt is missing (@karolisg) (c856d9a)

- fix: Handle empty arguments (@karolisg) (694a040)

- fix: Fix tags extraction (@karolisg) (732d872)

- fix: Add index to tool calls (@karolisg) (1963d20)

- fix: Support proxied engine types (@karolisg) (30d04b0)

- fix: Fix nested json schema (@karolisg) (2fcecc7)

- fix: Fix models name in GET /models API (@karolisg) (af93b7c)

- #38 fix: Fix ttft capturing (@karolisg)

- fix: Fix gemini call when message is empty (@karolisg) (77dfb2e)

- fix: Return formatted error on bedrock validation (@karolisg) (b36434a)

- fix: Create secure context for script router (@karolisg) (5adfcd7)

- fix: Fix serialization of user properties (@karolisg) (af3e20b)

- fix: Fix routing direction for tps and requests metrics (@karolisg) (74317bb)

- fix: Return authorization error on invalid key (@karolisg) (08b870c)

- fix: Fix map tool names to labels in openai (@karolisg) (f434dd7)

- #26 fix: Fix langdb config load (@karolisg)

- fix: Add router span (@karolisg) (789261c)

- #22 fix: Fix response format usage (@karolisg)

- fix: Fix model name in models_call span (@karolisg) (ba57d2b)

- fix: Store inference model name in model call span (@karolisg) (a446bee)

- fix: Fix tags in tracing (@karolisg) (f0f6ffd)

- fix: Fix provider name in tracing (@karolisg) (8754804)

- #18 fix: Fix provider name (@karolisg)

- fix: Improve error handling in loading config (@karolisg) (d4fbc25)

- fix: Fix tracing for openai and deepseek (@karolisg) (679aaa8)

- fix: Fix connection to mcp servers (@karolisg) (562852d)

- fix: Fix tonic shutdown on ctrl+c (@karolisg) (d83828f)
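Two entries above concern cached-token pricing: f1d4077 adds cached input token pricing, and f42a00b corrects the input cost so that cached tokens are subtracted before the regular input rate is applied. A sketch showing why the subtraction matters follows. It assumes OpenAI-style usage reporting, where `prompt_tokens` already includes the cached tokens, and prices quoted per million tokens. `Pricing` and `request_cost` are hypothetical names, and the rates in the example are illustrative, not real provider prices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pricing:
    """Hypothetical price table, in USD per 1M tokens."""
    input: float
    output: float
    cached_input: Optional[float] = None  # falls back to `input` when unset


def request_cost(prompt_tokens: int, cached_tokens: int,
                 completion_tokens: int, price: Pricing) -> float:
    """Cost of one request, assuming `prompt_tokens` already includes cached tokens."""
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    cached_rate = price.input if price.cached_input is None else price.cached_input
    # Cached tokens are billed at their own rate, so remove them from the
    # full-price input count to avoid charging them twice.
    uncached = prompt_tokens - cached_tokens
    return (uncached * price.input
            + cached_tokens * cached_rate
            + completion_tokens * price.output) / 1_000_000


cost = request_cost(10_000, 8_000, 500,
                    Pricing(input=2.50, output=10.00, cached_input=1.25))
```

With 10,000 prompt tokens of which 8,000 are cached, 500 completion tokens, and rates of 2.50 (input), 1.25 (cached input), and 10.00 (output) per million tokens, `request_cost` returns 0.02. Billing all 10,000 prompt tokens at the full input rate and then the 8,000 cached tokens again at the cached rate would double-count them and yield 0.04.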

Code Refactoring

- refactor: Remove mcp_server module and associated TavilySearch implementation (@karolisg) (ae7dbb1)

- refactor: Remove unnecessary logging in thread service middleware (@karolisg) (f775dc9)

- refactor: Migrate codebase to vllora functionality (@karolisg) (78575b3)

- refactor: Enhance embedding handling and introduce new model structure (@karolisg) (013b214)

- refactor: Remove unused EngineType variants from the engine module (@karolisg) (69e12a7)

- refactor: Clean up code formatting and remove unused dependency (@karolisg) (7b19a1b)

- refactor: Update model handling and enhance Azure OpenAI integration (@karolisg) (868ffef)

- refactor: Consolidate CredentialsIdent usage across modules and enhance cost calculation (@karolisg) (8cd96e8)

- refactor: Integrate price and credentials identification into model handling (@karolisg) (88fbae0)

- refactor: Update ModelIOFormats enum to include PartialEq derive and remove Bedrock model file (@karolisg) (65152c3)

- refactor: Introduce BedrockCredentials type and update AWS credential handling (@karolisg) (fa28214)

- refactor: Enhance metric routing to include default metrics for missing models (@karolisg) (54c7e2c)

- refactor: Update default context size in Bedrock model provider to zero (@karolisg) (1211217)

- refactor: Enhance Bedrock model ID formatting with region prefix (@karolisg) (8f80b92)

- refactor: Update Anthropic model to handle optional system messages (@karolisg) (b3859a8)

- refactor: Simplify message sending in stream_chunks function (@karolisg) (00b657e)

- refactor: Enhance Bedrock model ID handling with version replacement (@karolisg) (ec5ab40)

- refactor: Update Bedrock model provider to skip specific model ARNs and use model ARN for metadata (@karolisg) (d4034c1)

- refactor: Simplify Bedrock model ID handling and remove unused model ARN assignment (@karolisg) (968ba0a)

- refactor: Improve AWS region configuration handling in get_user_shared_config (@karolisg) (36c62c9)

- refactor: Add warning logs for Bedrock model name during conversation (@karolisg) (c4a7ed0)

- refactor: Remove debug logging for Bedrock client credentials (@karolisg) (5991121)

- refactor: Update BedrockModel to utilize ChatCompletionMessageWithFinishReason and adjust region configuration (@karolisg) (b7fb560)

- refactor: Remove unnecessary error logging in GeminiModel response handling (@karolisg) (343df18)

- refactor: Enhance OpenAIModel response handling with finish reason and usage tracking (@karolisg) (91aaf32)

- refactor: Update ChatCompletionMessage to include finish reason and adjust related model implementations (@karolisg) (f884c47)

- refactor: Simplify match expression for ModelError in GatewayApiError and streamline error logging in stream_chunks (@karolisg) (68deec6)

- refactor: Remove redundant logging of system messages in AnthropicModel (@karolisg) (f6d3a73)

- refactor: Simplify ProjectTraceMap type by removing receiver from tuple (@karolisg) (ea1c088)

- refactor: Add alias for InMemory transport type in McpTransportType enum (@karolisg) (04710a1)

- refactor: Clean up unused app_data references in ApiServer and adjust telemetry imports (@karolisg) (a40a683)

- refactor: Remove unused TraceMap references from RoutedExecutor and related modules (@karolisg) (2fe3067)

- refactor: integrate InMemoryMetricsRepository into routing logic (@karolisg) (329e153)

- refactor: split chat completion streaming into separate chunks for delta, finish reason and usage (@karolisg) (c67d478)

- refactor: Fix use of variables (@karolisg) (5e5ad6b)

- refactor: rename PromptCache to ResponseCache for better clarity and consistency (@karolisg) (bc9677f)

- refactor: move caching logic to dedicated cache module and update response types (@karolisg) (708797e)
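Prompt variables appear in several entries of this release: #73 adds a `variables` field to chat completions, 6adaf12 returns the template unchanged when no variables are provided, 45d960c handles template errors during rendering, and 5e5ad6b reworks how variables are used. A minimal Python sketch of such a render step follows. The `{{name}}` placeholder syntax, the choice to raise on a missing variable, and the names `render` and `TemplateError` are assumptions for illustration, not vLLora's actual template engine.

```python
import re
from typing import Dict, Optional

# Hypothetical placeholder syntax: {{ name }}, with optional inner whitespace.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


class TemplateError(ValueError):
    """Raised when a template references a variable that was not supplied."""


def render(template: str, variables: Optional[Dict[str, str]] = None) -> str:
    """Substitute {{name}} placeholders in a prompt template."""
    if not variables:
        # Return the template untouched when no variables are supplied (cf. 6adaf12).
        return template

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            # Report a clear error instead of silently leaving the placeholder.
            raise TemplateError(f"missing value for template variable {name!r}")
        return str(variables[name])

    return PLACEHOLDER.sub(substitute, template)
```

For instance, `render("Hello {{ name }}!", {"name": "Ada"})` produces `"Hello Ada!"`. Raising on an unknown placeholder is one possible policy; a gateway could instead leave the placeholder in place or substitute an empty string.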